Father

A new method makes it possible to reconstruct the content of encrypted chatbot conversations.

Researchers at Israel's Offensive AI Lab have published a technique for reconstructing text from intercepted chatbot messages. Kaspersky Lab has published a detailed breakdown of the study.

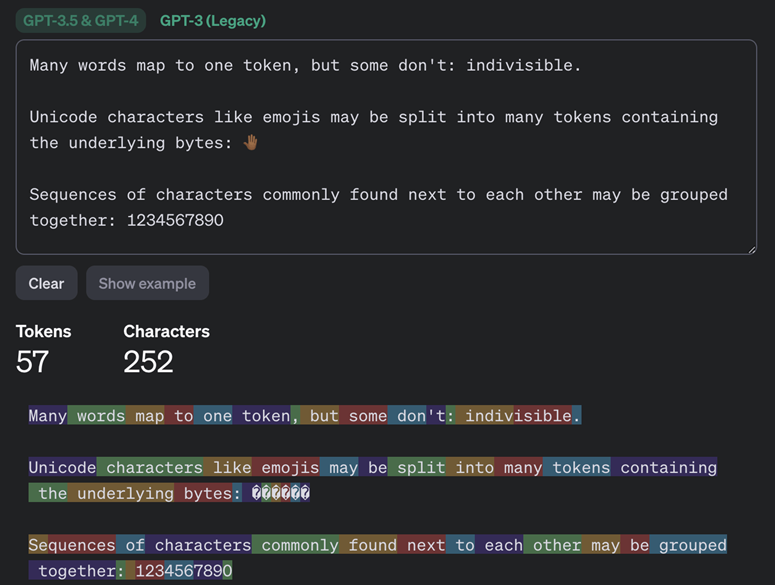

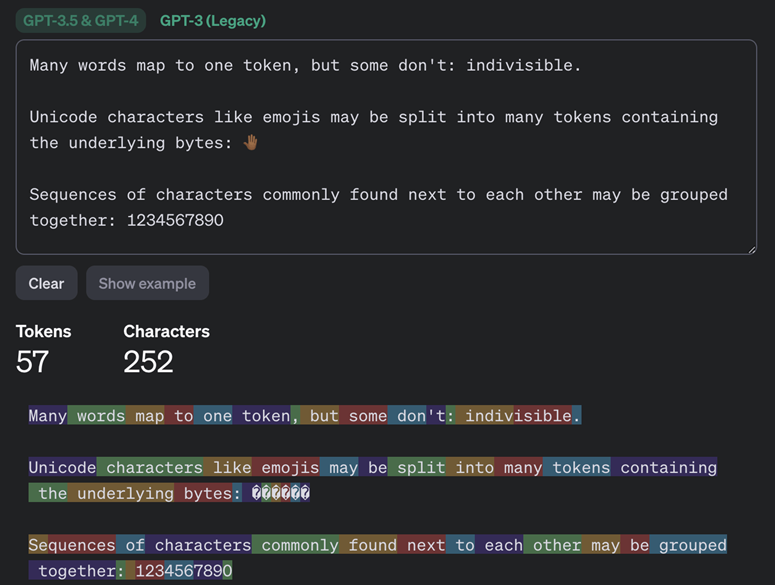

The new technique is a side-channel attack based on analyzing token lengths in encrypted messages. Because chatbots built on large language models (LLMs) transmit information in tokens (sequences of characters that commonly occur in text) rather than in words or individual characters, the lengths of those tokens make it possible to guess the content of a message. The OpenAI website has a "Tokenizer" tool that shows how this works.

Tokenization of messages by GPT-3.5 and GPT-4 models
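To make the idea of a token-length sequence concrete, here is a minimal Python sketch. The token split is written by hand purely for illustration (it is an assumption, not the output of any real tokenizer), and `token_lengths` is a hypothetical helper name.

```python
# Hypothetical token split, written by hand for illustration only;
# a real tokenizer may split the same sentence differently.
tokens = ["I", " can", " help", " you", " with", " that", "."]


def token_lengths(tokens):
    """Return the length in characters of each token, in order."""
    return [len(t) for t in tokens]


lengths = token_lengths(tokens)
print(lengths)  # [1, 4, 5, 4, 5, 5, 1]
```

The list of lengths is the only thing the attack works from: the characters themselves stay encrypted.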

The main vulnerability is that chatbots send tokens sequentially, one at a time, without compression or additional encoding, so the size of each encrypted packet reveals the length of the token inside it. Some chatbots (such as Google Gemini) are protected from this type of attack, but most others were vulnerable.
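The leak described above can be sketched in a few lines of Python. This is a simplified model, not a description of any particular service: it assumes each token travels in its own encrypted record and that encryption adds a constant number of bytes. The value of `FRAMING_OVERHEAD` and both function names are hypothetical.

```python
# Assumed constant number of bytes added to every record (headers plus
# authentication tag). The value is hypothetical.
FRAMING_OVERHEAD = 24


def observed_sizes(tokens):
    """Simulate what an eavesdropper sees: one record size per streamed token."""
    return [FRAMING_OVERHEAD + len(t.encode("utf-8")) for t in tokens]


def infer_token_lengths(sizes, overhead=FRAMING_OVERHEAD):
    """Recover token lengths (in bytes) by subtracting the constant overhead."""
    return [size - overhead for size in sizes]


# Hand-written token split, for illustration only.
tokens = ["I", " can", " help", " you", " with", " that", "."]
sizes = observed_sizes(tokens)
print(sizes)                       # [25, 28, 29, 28, 29, 29, 25]
print(infer_token_lengths(sizes))  # [1, 4, 5, 4, 5, 5, 1]
```

Under these assumptions the observer never decrypts anything, yet still ends up with the exact sequence of token lengths.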

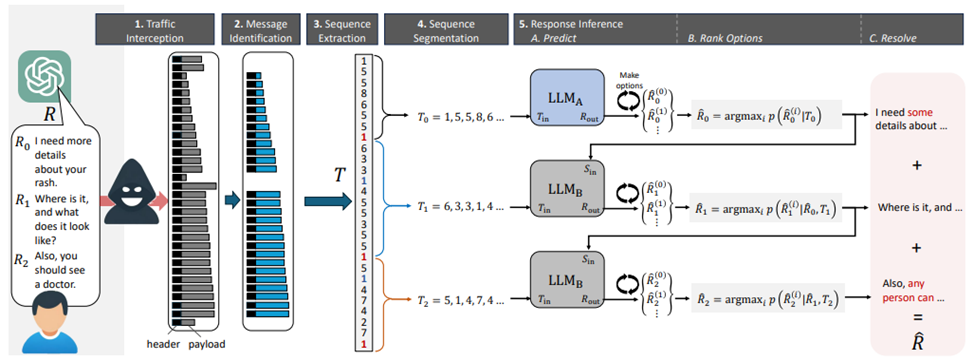

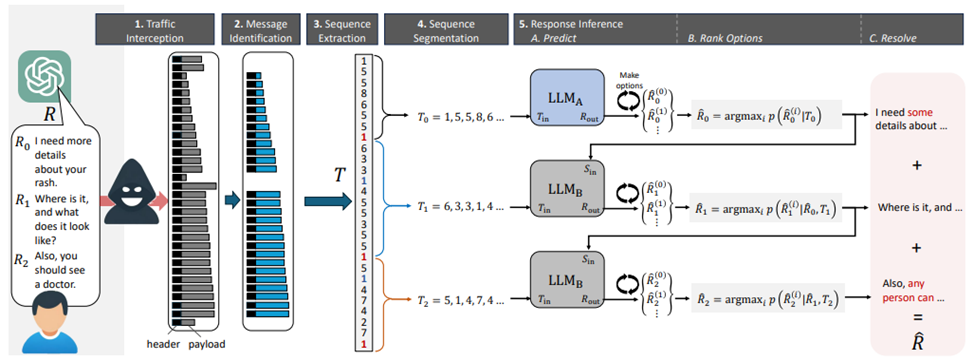

To restore the text, the researchers used two LLMs: one specialized in reconstructing the standard opening messages, the other in reconstructing the rest of the conversation. The text was recovered accurately in about 29% of cases, and the general topic of the conversation was guessed in about 55%.

Attack pattern
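The researchers used LLMs to turn length sequences back into text. As a much cruder stand-in, the toy sketch below swaps the LLMs for a lookup over a few candidate replies, just to show why lengths narrow down the text without always pinning it down. The candidate sentences, their token splits and the function names are all invented for illustration.

```python
# Hypothetical candidate replies with hand-written token splits.
CANDIDATES = {
    "I can help you with that.": ["I", " can", " help", " you", " with", " that", "."],
    "Sorry, I cannot do that.": ["Sorry", ",", " I", " cannot", " do", " that", "."],
    "I may take him with them.": ["I", " may", " take", " him", " with", " them", "."],
}


def signature(tokens):
    """Token-length sequence of a reply, as a comparable tuple."""
    return tuple(len(t) for t in tokens)


def matching_candidates(observed, candidates=CANDIDATES):
    """Return every candidate whose length signature equals the observed one."""
    target = tuple(observed)
    return [text for text, toks in candidates.items() if signature(toks) == target]


print(matching_candidates([1, 4, 5, 4, 5, 5, 1]))
# ['I can help you with that.', 'I may take him with them.']
print(matching_candidates([5, 1, 2, 7, 3, 5, 1]))
# ['Sorry, I cannot do that.']
```

Two different sentences share the first signature, which hints at why exact recovery succeeds far less often than guessing the general topic.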

A distinctive feature of this attack is its dependence on the language of the conversation: it is most effective for English, which has characteristically long tokens, and noticeably less effective for other languages, including Russian.

Even in Germanic and Romance languages, which are close to English, tokens are on average 1.5-2 times shorter. In Russian the average token is shorter still, usually only a couple of characters long, which significantly reduces the potential effectiveness of the attack.

It is worth emphasizing that this method is unlikely to reliably reveal specific details such as names, numeric values, dates, addresses, and other critical data.

In response to the publication, chatbot developers, including Cloudflare and OpenAI, began adding "garbage" data (padding) to their responses, which reduces the likelihood of a successful attack. Other chatbot developers will probably implement similar protection to make communication with chatbots safer.
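One way padding can hide token lengths is sketched below. This is not the scheme Cloudflare or OpenAI actually deployed, only a minimal illustration that assumes every token is padded to the same fixed payload size before encryption. `PAD_TO`, `pad` and `unpad` are hypothetical names.

```python
import secrets

PAD_TO = 32  # hypothetical fixed payload size in bytes


def pad(token: str, size: int = PAD_TO) -> bytes:
    """Pad a token to a fixed size: 1 length byte + token bytes + random filler."""
    data = token.encode("utf-8")
    if len(data) > size - 1:
        raise ValueError("token too long for the chosen payload size")
    filler = secrets.token_bytes(size - 1 - len(data))
    # The first byte stores the real length so the receiver can strip the filler.
    return bytes([len(data)]) + data + filler


def unpad(payload: bytes) -> str:
    """Recover the original token from a padded payload."""
    real_length = payload[0]
    return payload[1:1 + real_length].decode("utf-8")


# Hand-written token split, for illustration only.
tokens = ["I", " can", " help", " you", " with", " that", "."]
padded = [pad(t) for t in tokens]
print({len(p) for p in padded})             # {32}
print("".join(unpad(p) for p in padded))    # I can help you with that.
```

Because every payload now has the same size, the sizes seen on the wire no longer depend on the token inside, at the cost of extra traffic.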
