Carding 4 Carders

Is transparency in language models really vital? Or can you do without it?

Researchers at Stanford University recently presented the Foundation Model Transparency Index (FMTI), a report that evaluates the transparency of foundation models. The authors urge companies to disclose more information, such as the data and human labor used to train their models.

Stanford Professor Percy Liang, one of the researchers, said: "Over the past three years, it has become clear that the transparency of AI models is decreasing, while their capabilities are growing. This is problematic, as in other areas, such as social media, the lack of transparency has already led to undesirable consequences."

Foundation models, which include large language models (LLMs), are AI systems trained on huge datasets and capable of performing a variety of tasks, from writing text to programming. Examples include GPT-4 from OpenAI, Llama 2 from Meta, and LaMDA from Google.

The companies that develop such models are leading the development of generative AI, which has attracted the attention of businesses of all sizes since OpenAI launched ChatGPT with the active support of Microsoft.

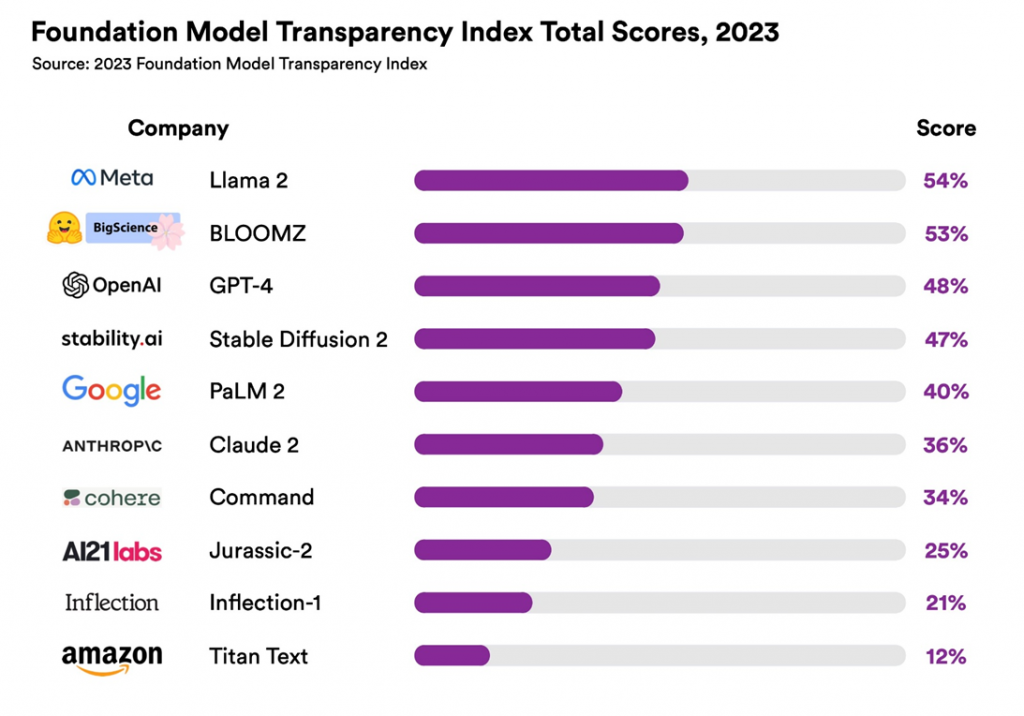

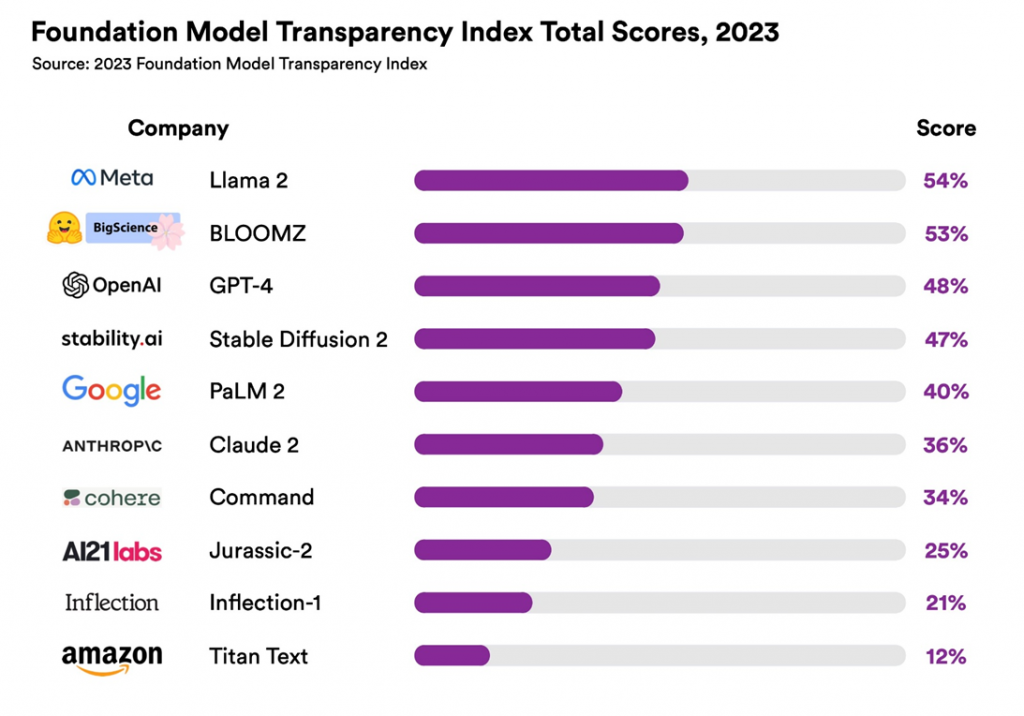

The index evaluated 10 popular models against 100 transparency indicators. All of the models scored low: even the most transparent, Llama 2 from Meta, received only 54 points out of 100, while Amazon's Titan model came last with just 12 points.
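To make the arithmetic behind such a score concrete, here is a minimal sketch. It assumes each indicator is simply marked as satisfied or not and that the score is the share of satisfied indicators scaled to 100; the article does not spell out the scoring method. The function name and the indicator names are hypothetical, and the data is made up for illustration.

```python
from typing import Dict


def transparency_score(indicators: Dict[str, bool]) -> float:
    """Return the share of satisfied indicators, scaled to 0-100.

    Assumption: every indicator is a yes/no check with equal weight.
    """
    if not indicators:
        raise ValueError("at least one indicator is required")
    satisfied = sum(1 for met in indicators.values() if met)
    return 100 * satisfied / len(indicators)


# Hypothetical data: 100 placeholder indicators, the first 54 satisfied.
example = {f"indicator_{i}": i < 54 for i in range(100)}
print(transparency_score(example))  # prints 54.0
```

Under these assumptions, a model that satisfies 54 of 100 indicators scores 54, which matches how the top result above is reported.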

As the world increasingly depends on these models for decision-making, understanding their limitations and biases becomes critical, the index's authors emphasize. The researchers also expressed hope that their report will encourage companies to be more transparent about their foundation models and will give governments a starting point as they look for ways to regulate this rapidly growing field.

The index is a project of the Center for Research on Foundation Models (CRFM) at the Stanford Institute for Human-Centered Artificial Intelligence.
